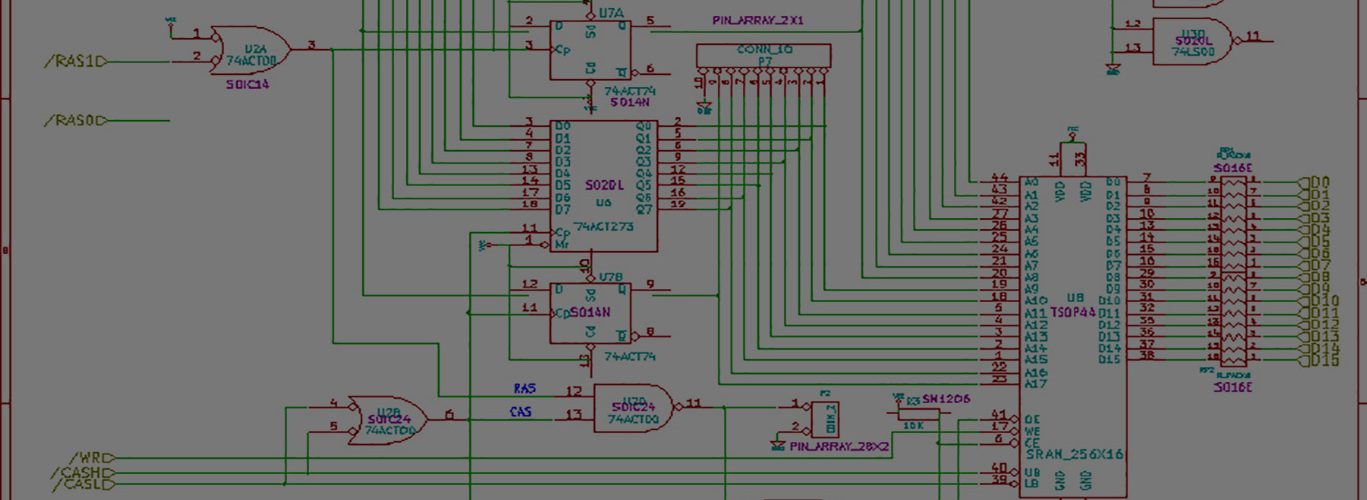

So I’ve been trying to optimize a freeware memory controller for my particular memory chip.

I’m running into a situation where the DRAM-to-ALTRAM copy takes upwards of 24 µs per line, but I only have 32 µs per line in total. That leaves only about 8 µs of “free” memory time. I was _really_ trying to get the copy time down so that the rest of the memory time could be spent writing from the Amiga to the DRAM.
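To put that budget in clock cycles, here is a quick conversion using the figures above. It assumes the 24 µs copy time was measured with the controller at 166 MHz; at f MHz there are f clock cycles per microsecond.

```python
# Per-line memory budget using the figures from the post.
# Assumption: the 24 us copy time was measured with a 166 MHz controller clock.
LINE_TIME_US = 32.0   # total memory time available per line
COPY_TIME_US = 24.0   # observed DRAM-to-ALTRAM copy time (upper figure)
CLOCK_MHZ = 166

def us_to_cycles(time_us: float, clock_mhz: float) -> int:
    """At clock_mhz there are clock_mhz cycles per microsecond."""
    return round(time_us * clock_mhz)

line_cycles = us_to_cycles(LINE_TIME_US, CLOCK_MHZ)
copy_cycles = us_to_cycles(COPY_TIME_US, CLOCK_MHZ)
free_cycles = line_cycles - copy_cycles          # cycles left for Amiga writes
free_fraction = free_cycles / line_cycles

print(line_cycles, copy_cycles, free_cycles, f"{free_fraction:.0%}")
```

Every cycle shaved off the copy goes straight into the free window, so the copy is the thing worth optimizing.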

I’m running into weird problems, though. First off, sometimes the copy exceeds the time per horizontal line, and I can’t figure out wtf is causing it. It isn’t as simple as a bank change or something else that adds a little extra delay.

And yet everything seems to be working: no graphics flaws that I can see. If the copy were overrunning the line, I would expect to see randomly repeated rows or something.

I took the controller from 133 MHz to 166 MHz, and I see some improvement there. The controller doesn’t use the shorter tRCD wait where it could; it waits the full tRC every time. I tried changing that value to the tRCD from the datasheet, and that really speeds things up, but any time there is a bank change, there’s an associated error.
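tRCD and tRC cover different things: tRCD is the ACTIVE-to-READ/WRITE delay, while tRC is the minimum spacing between two ACTIVE commands to the same bank. A controller that waits tRC everywhere satisfies both implicitly; once that wait is cut to tRCD, whatever issues the next ACTIVE has to enforce tRC (plus tRRD, tRP and friends) on its own. The sketch below shows both waits in cycles and a minimal per-bank tracker. The 60 ns / 18 ns values are hypothetical, typical of a -6 speed-grade SDR SDRAM, and `BankTimer` is an illustrative name, not part of the actual controller.

```python
# Hypothetical datasheet values, typical of a -6 speed-grade SDR SDRAM.
# Replace with the numbers from your own chip's datasheet.
T_RC_NS = 60   # ACTIVE to ACTIVE, same bank
T_RCD_NS = 18  # ACTIVE to READ/WRITE

def ns_to_cycles(t_ns: int, clock_mhz: int) -> int:
    """Whole clock cycles needed to cover t_ns (integer ceiling division)."""
    return (t_ns * clock_mhz + 999) // 1000

class BankTimer:
    """Per-bank bookkeeping for tRCD and tRC only.

    Simplified sketch: ignores tRRD, tRAS, tRP, CAS latency and refresh.
    """

    def __init__(self, n_banks: int, trc: int, trcd: int):
        self.trc = trc
        self.trcd = trcd
        self.last_active = [None] * n_banks  # cycle of last ACTIVE per bank

    def activate(self, bank: int, now: int) -> int:
        """Return the earliest cycle an ACTIVE to `bank` may issue, and record it."""
        last = self.last_active[bank]
        start = now if last is None else max(now, last + self.trc)
        self.last_active[bank] = start
        return start

    def first_read(self, bank: int) -> int:
        """Earliest READ after the most recent ACTIVE to `bank`."""
        return self.last_active[bank] + self.trcd

for mhz in (133, 166):
    rc = ns_to_cycles(T_RC_NS, mhz)
    rcd = ns_to_cycles(T_RCD_NS, mhz)
    print(f"{mhz} MHz: tRC = {rc} cycles, tRCD = {rcd} cycles")

timer = BankTimer(n_banks=4,
                  trc=ns_to_cycles(T_RC_NS, 166),
                  trcd=ns_to_cycles(T_RCD_NS, 166))
a = timer.activate(0, now=0)   # bank 0 opens at cycle 0
r = timer.first_read(0)        # READ allowed after tRCD, not tRC
b = timer.activate(1, now=5)   # different bank: no tRC wait in this model
c = timer.activate(0, now=5)   # same bank again (PRECHARGE not modelled): held off by tRC
print(a, r, b, c)
```

The point of tracking per bank is that the tRCD shortcut and the tRC rule can both hold at once; the shortcut alone only removes a wait that was also quietly protecting the next ACTIVE.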

I’m beginning to think that the biggest payoff in optimizing the controller is the burst size, because there seems to be a lot of overhead with each read request. At 166 MHz, data comes in from the memory pretty damn fast: each additional 16-bit word arrives on the next clock. Since the per-request overhead is fixed, doubling the amount of data received per request should make the transfer more efficient.
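The fixed-overhead argument can be checked with a simple cost model: each read request costs a fixed number of overhead cycles plus one cycle per 16-bit word. The line length (1024 words) and overhead (8 cycles) below are hypothetical placeholders, not measurements from this controller.

```python
WORDS_PER_LINE = 1024  # hypothetical: 16-bit words copied per line
OVERHEAD = 8           # hypothetical: fixed cycles per read request
CLOCK_MHZ = 166

def transfer_cycles(words: int, burst_len: int, overhead: int) -> int:
    """Cycles to read `words` words as separate requests of `burst_len` words.

    Model: each request costs `overhead` cycles plus one cycle per word,
    and requests do not overlap.
    """
    requests = -(-words // burst_len)  # ceiling division
    return requests * (overhead + burst_len)

def efficiency(burst_len: int, overhead: int) -> float:
    """Fraction of cycles spent actually moving data."""
    return burst_len / (overhead + burst_len)

for bl in (2, 4, 8):
    cycles = transfer_cycles(WORDS_PER_LINE, bl, OVERHEAD)
    print(f"BL={bl}: {cycles} cycles ({cycles / CLOCK_MHZ:.1f} us), "
          f"efficiency {efficiency(bl, OVERHEAD):.0%}")
```

This model is pessimistic: SDR SDRAM lets a controller issue the next READ to an already-open row while the previous burst is still coming out, which hides much of the per-request latency. So pipelining reads may be worth comparing against simply raising the burst length.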

The controller has a fixed burst size of 2, and I’ll need to modify it a fair bit to support longer bursts. What makes my job especially harder is that the order of the data depends on the starting column address. With a burst of 4 in sequential mode, the order is set by the two lowest column address bits (A1:A0): a read starting at A1:A0 = 00 returns words in order 0-1-2-3, but one starting at A1:A0 = 11 returns 3-0-1-2. It makes things harder than just tacking the received data onto the end. 🙂
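The wrap-around ordering follows the JEDEC SDR SDRAM burst rules: a burst stays inside an aligned block of burst-length columns, and the low-order bits of the starting column pick where in that block it begins. The sketch below computes the column of each beat (sequential and interleaved modes) and writes each received word to the slot for its column instead of appending it. `burst_columns` and `place_burst` are hypothetical helper names for illustration.

```python
def burst_columns(start_col: int, burst_len: int, interleaved: bool = False) -> list[int]:
    """Column address of each beat of an SDR SDRAM burst.

    The burst wraps within an aligned block of `burst_len` columns
    (burst_len must be a power of two); the low-order bits of
    `start_col` select the starting position inside that block.
    """
    mask = burst_len - 1
    base = start_col & ~mask   # aligned start of the block
    low = start_col & mask     # e.g. A1:A0 for a burst of 4
    if interleaved:
        return [base | (low ^ i) for i in range(burst_len)]
    return [base | ((low + i) & mask) for i in range(burst_len)]

def place_burst(buffer: list, start_col: int, beats: list, interleaved: bool = False) -> None:
    """Store each received beat at the buffer index for its column."""
    for col, word in zip(burst_columns(start_col, len(beats), interleaved), beats):
        buffer[col] = word

print(burst_columns(0, 4))   # A1:A0 = 00
print(burst_columns(3, 4))   # A1:A0 = 11

line = [None] * 8
place_burst(line, 3, ["w3", "w0", "w1", "w2"])  # beats in arrival order
print(line)
```

A simpler design choice, if the copy walks a line linearly: issue every read at a burst-aligned column (low bits 00). Then the order is always 0-1-2-3 and the received data really can be tacked onto the end; the reordering above only matters for unaligned starts.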
