While I have yet to implement the full-scale error correction I have in mind, I did some manual tests today.

I took a track that was erroring out and, for each bad byte, swapped in values that were one bit away. In some cases, it appeared to fix the problem.

There’s a lot more work to be done on this, but I think it will pay off.

I think it’s probably fair to say that you’re more likely to see a missing bit (or rather a missing flux transition) than see a transition that wasn’t actually there.

Assuming you haven’t already thought of that, perhaps you could incorporate it into your algorithm?

FWIW, I’m working on something broadly similar to your Amiga floppy reader, but for just about any disc format instead of specialising on one specific format. It’s basically a USB-enabled Microchip PIC bolted onto an FPGA — the FPGA reads and writes the transitions (the logic can be summed up as “a huge, messy state machine”) and the PIC tells the FPGA what to do (including loading the microcode from an Atmel Dataflash chip on power-up).

I’m aiming for timing resolution more than anything — the plan is to build something that can not only read or write just about any floppy disc format (MFM, FM, GCR, the list goes on) but also identify the type of copy protection in use (if any) and tell you if a given track has been modified after the disc was mastered (utterly useless for reading old data discs, but good for seeing if the ‘original’ copy of $SOME_OLD_RARE_GAME is really original, and if not, what exactly was changed).

But anyway, enough of my ranting. I’m just reading through some of your past posts — you have some very interesting ideas.

Hi Phil!

I have been looking for a detailed paper on exactly HOW floppy media ends up failing. I’ve read about super-paramagnetic thermal decay — and that’s interesting. While I probably agree with you, do you have a specific rationale behind why you believe that a transition is likely to be seen as no-transition, rather than the other way around?

There are definitely a lot more no-transition bits (0’s) than transition bits (1’s). Given a consistent error rate across the media, doesn’t this mean that there would be more 0’s with errors? Just playing Devil’s Advocate here.

I _have_ been looking for ways of weighing likely possibilities over unlikely possibilities. Honestly, I think my Hamming distance stuff might negate the need to do much more (trying not to sound like a broken record here): I first check valid bytes that are 1 bit off, next check valid bytes that are 2 bits off, and then finally up to 3 bits off. My algorithm doesn’t care whether the missing bits are 1’s rather than 0’s, or vice versa.
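To make the search order concrete, here is a minimal sketch of that nearest-first lookup. It is not my actual code: the names `is_valid_mfm_byte` and `candidates` are made up for illustration, and the validity rule (no two adjacent 1’s and no run of four or more 0’s inside a single raw byte) is an assumption that ignores what happens across byte boundaries.

```python
def is_valid_mfm_byte(b: int) -> bool:
    """Assumed rule for a raw MFM byte: no adjacent 1s, no run of four or more 0s.

    Only the eight bits of this byte are checked; neighbouring bytes are ignored.
    """
    bits = format(b, "08b")
    return "11" not in bits and "0000" not in bits


# Precompute every raw byte value that passes the assumed rule.
VALID_BYTES = [b for b in range(256) if is_valid_mfm_byte(b)]


def candidates(bad: int, max_distance: int = 3):
    """Yield (distance, byte) pairs for valid bytes, closest Hamming distance first."""
    for distance in range(1, max_distance + 1):
        for valid in VALID_BYTES:
            # XOR leaves a 1 wherever the two bytes differ; count those bits.
            if bin(bad ^ valid).count("1") == distance:
                yield distance, valid


if __name__ == "__main__":
    bad_byte = 0xC4  # 11000100 has two adjacent 1s, so it cannot be right
    for distance, byte in candidates(bad_byte, max_distance=2):
        print(distance, format(byte, "08b"))
```

Each candidate would then be swapped into the sector and the checksum re-tested, stopping at the first substitution that passes.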

I’ve been doing a lot of reading on FPGAs lately. Very interesting devices. If I had more time and money, I’d really like to get some. Are you using Xilinx or Altera? Verilog/VHDL? Have you seen the Amiga custom chips done on an FPGA? It’s really awesome.

https://home.hetnet.nl/~weeren001/minimig.html

There are other people around attempting to do floppy stuff with FPGAs. I think I have some links on the right-hand side of the page.

Your work sounds an awful lot like what the people at SPS and CAPS do. Have you seen their work?

https://www.softpres.org/

How are you detecting changes vs original mastering? Do you run a blog/site etc covering the details? I’d like to read about that! And detecting copy protection? Checking for long-tracks, more than 80 tracks, etc? How are you going about reading the disks? Time between transitions? and do you sample? or edge-detect or ?

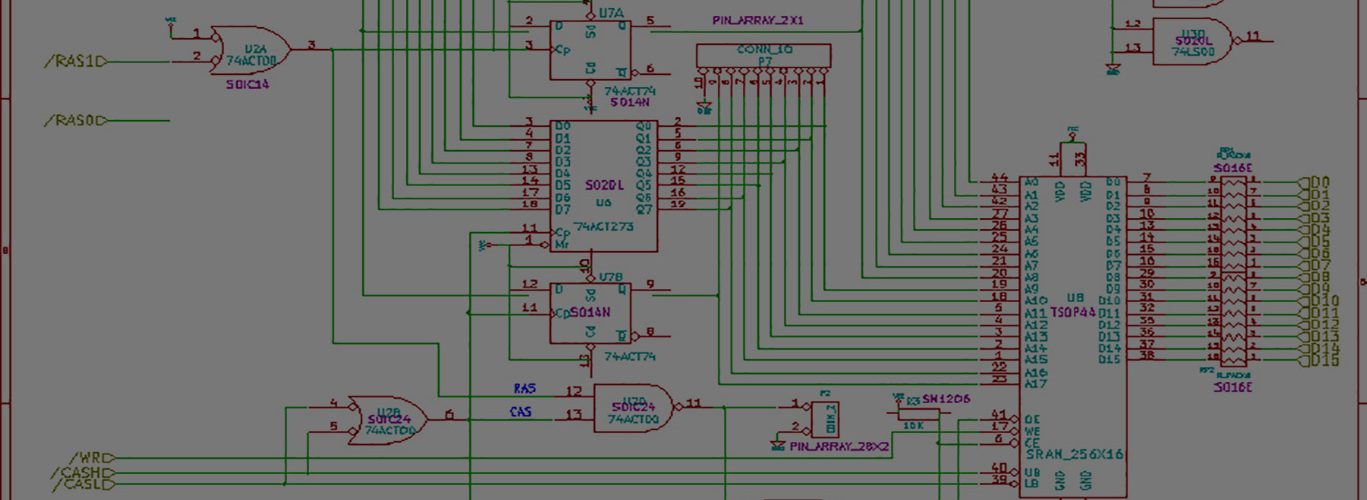

Forgive the ugly hand-drawing, but https://techtravels.org/?p=151 shows how my overall uC firmware works: dual interrupt sources, either an interrupt via edge detection (saw a transition, store a 1), or a timeout occurred (means that a 0 happened).
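As a rough model of that edge/timeout scheme, the sketch below turns a list of times between transitions into a bitstream. It is a simulation in Python, not the firmware itself; the function name `intervals_to_bits` and the 2 µs bit cell are assumptions chosen for illustration.

```python
def intervals_to_bits(intervals_us, cell_us=2.0):
    """Model the two interrupt sources: a timeout stores a 0, an edge stores a 1.

    intervals_us: times between successive flux transitions, in microseconds.
    cell_us: assumed bit-cell length (2 us is used here purely as an example).
    """
    bits = []
    for dt in intervals_us:
        # How many bit cells elapsed before this edge arrived (at least one).
        cells = max(1, round(dt / cell_us))
        bits.extend([0] * (cells - 1))  # one timeout per empty cell
        bits.append(1)                  # the edge itself
    return bits


if __name__ == "__main__":
    # Slightly jittery intervals of roughly 2, 3 and 4 bit cells.
    print(intervals_to_bits([4.0, 6.1, 7.9]))
```

Rounding to the nearest cell stands in for the timer being restarted on every edge, which is what keeps small speed errors from accumulating.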

I struggled to find a working method. Other people use different approaches; I think Jens @ Individual Computers uses the time BETWEEN transitions as a way of telling what the data is.

Fun fun stuff.

Thanks for the comment and the compliment.

> While I probably agree with you, do you have a specific rationale behind why you believe that a transition is likely to be seen as no-transition, rather than the other way around?

I was thinking along the lines of the magnetic field of the recorded data weakening over time, but there would still be a transition between N-polarised and S-polarised.

I suppose the more likely event is the transition between two bit zones becoming more indistinct, and harder for the head amplifier to detect. Again, you’d see a no-transition but that would be because the transition was too weak to trigger the FDD’s threshold detection circuitry.
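If missed transitions really are more likely than phantom ones, one way to use that in a Hamming-style search is to charge the two kinds of bit flip differently. The sketch below is only an illustration: `flip_cost`, `rank` and the weights 1.0 and 3.0 are invented for the example and are not measured values.

```python
def flip_cost(read: int, candidate: int,
              drop_cost: float = 1.0, spurious_cost: float = 3.0) -> float:
    """Score how plausible it is that `candidate` was written but `read` came back.

    A 1 in the candidate that reads as 0 is a dropped transition (cheap).
    A 1 in the read byte that the candidate lacks is a phantom transition (dear).
    Both weights are illustrative assumptions.
    """
    dropped = candidate & ~read & 0xFF
    spurious = read & ~candidate & 0xFF
    return (drop_cost * bin(dropped).count("1")
            + spurious_cost * bin(spurious).count("1"))


def rank(read: int, candidates):
    """Order candidate bytes from most to least plausible under the weights."""
    return sorted(candidates, key=lambda c: (flip_cost(read, c), c))


if __name__ == "__main__":
    read_byte = 0b01000100
    # Both candidates are one bit away, but only the first needs a dropped 1.
    for c in rank(read_byte, [0b00000100, 0b01010100]):
        print(format(c, "08b"), flip_cost(read_byte, c))
```

With equal weights this collapses back to plain Hamming distance, so the asymmetry can be tuned or switched off without changing the search itself.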

> I’ve been doing a lot of reading on FPGAs lately. Very interesting devices. If I had more time and money, I’d really like to get some. Are you using Xilinx or Altera? Verilog/VHDL?

Verilog on Xilinx CPLDs and Altera FPGAs. A bit of an odd choice, but that’s basically because I bought a load of Xilinx XC9500 and XC9500XL chips from eBay, then found that nobody in the UK sold small quantities of Xilinx FPGAs. My usual supplier had a bunch of Altera Cyclone-IIs, so I bought four of them to play with.

> Have you seen the Amiga custom chips done on an FPGA? It’s really awesome.

Minimig? Yep, I’ve seen it. I’ve been trying to find some good references on what the Amiga floppy controller can actually do (and how to program it), but haven’t found much beyond “it raw-reads, then the CPU decodes the data”.

> Your work sounds an awful lot like what the people at SPS and CAPS do.

I’m actually working with them to make my floppy reader suitable for use as an archiving / preservation tool. Something broadly similar to a Trace duplicator, but built from almost-off-the-shelf parts.

> How are you detecting changes vs original mastering?

By looking out for changes in the read rate. It’s highly unlikely that two drives will have exactly the same rotational speed (mechanical and electrical tolerances are pretty much inescapable). So let’s assume your mastering drive was running at 300RPM (typical for a 3.5″ disc). If someone then writes a sector on a drive that runs at ~305RPM, then the data rate of that sector will shift fractionally relative to the mastered area.

If you assume that nearly all the disc was mastered, and that only a few sectors are likely to have been modified (e.g. high score data), then you have a large sample of one type of data which makes it fairly easy to spot the ‘odd one out’.
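A minimal sketch of that ‘odd one out’ test, assuming you already have one average bit-cell time per sector. The function name `find_modified`, the threshold of 5 and the sample figures are all made up for illustration; the median and median absolute deviation are just one robust way to do it.

```python
from statistics import median


def find_modified(cell_times_us, threshold=5.0):
    """Return indices of sectors whose bit-cell time stands out from the rest.

    cell_times_us: one average bit-cell time per sector (hypothetical input).
    threshold: how many median absolute deviations counts as an outlier.
    """
    centre = median(cell_times_us)
    # Median absolute deviation; fall back to a tiny value if every sector matches.
    spread = median(abs(t - centre) for t in cell_times_us) or 1e-12
    return [i for i, t in enumerate(cell_times_us)
            if abs(t - centre) / spread > threshold]


if __name__ == "__main__":
    # Eleven sectors; sector 6 was rewritten on a drive with a different speed.
    times = [2.000, 2.001, 1.999, 2.000, 2.002, 1.998,
             2.033, 2.000, 2.001, 1.999, 2.000]
    print(find_modified(times))
```

Using the median rather than the mean means the few modified sectors do not drag the baseline towards themselves.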

> Do you run a blog/site etc covering the details? I’d like to read about that!

https://blog.philpem.me.uk and https://www.philpem.me.uk. Most of the discussion is happening on the Classiccmp mailing list at http://www.classiccmp.org; there isn’t much about it on my blog.

> And detecting copy protection? Checking for long-tracks, more than 80 tracks, etc?

Because it’s reading from either an Index Pulse to another Index Pulse, or from an MFM SYNC word (which is custom programmable — Sync A1 aka 0x4489, or indeed any MFM pattern is valid) to an index or sync word, you’ve got the entire physical track. A bit of data processing will tell you if the track is a long-track or just noise or a blank track. The data timing graphs are a total giveaway…

There are a few articles about this type of analysis on the SPS’s website.

> How are you going about reading the disks? Time between transitions? and do you sample? or edge-detect or ?

Edge-detect, with an internal 15-bit 40MHz timer/counter to do time-between-transitions measurement. Resolution is circa 25 nanoseconds (a Trace duplicator does 50ns), and 4Msamples of SDRAM basically means you can store an entire track at the maximum resolution. The counter locks at 0x7FFF if the timer overflows.

The Index pulse state is stored in the MSbit of the data word.
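For anyone wanting to play with data in that shape, here is a small sketch of unpacking one sample. The 16-bit word layout (index flag on top of a 15-bit count) is my reading of the description above, and the name `decode_sample` is made up for the example.

```python
TICK_NS = 25         # one tick of a 40 MHz counter
COUNT_MASK = 0x7FFF  # low 15 bits hold the time since the previous transition
INDEX_BIT = 0x8000   # MSbit carries the index pulse state


def decode_sample(word: int):
    """Split one assumed 16-bit sample word into (index, interval_ns, overflow)."""
    index = bool(word & INDEX_BIT)
    ticks = word & COUNT_MASK
    # The counter sticks at its maximum instead of wrapping around.
    overflow = ticks == COUNT_MASK
    return index, ticks * TICK_NS, overflow


if __name__ == "__main__":
    for word in (0x80A0, 0x00F0, 0x7FFF):
        print(hex(word), decode_sample(word))
```

An overflowed sample only says the gap was at least about 819 µs, so it is better treated as ‘no flux seen’ than as a real measurement.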

I’m thinking about adding an option to track the disc’s current rotational position for each transition as well. That way you can figure out that the disc was running – say – 2% too slow for part of the track and 1% fast for the rest of it, and adjust the timing values in software to match up. That is to say, you eliminate any error caused by your particular disc drive’s spindle motor varying in speed.

> I struggled to find a working method. Other people use different approaches; I think Jens @ Individual Computers uses the time BETWEEN transitions as a way of telling what the data is.

Oh, the Catweasel. It wouldn’t be quite so bad if he’d actually used a fairly sane clock rate (an integer multiple of the data rate).

My design goals are/were:

– Low cost — no sense in making something nobody can afford.

– Small, light and easy to carry around — if you’ve got the last remaining copy of XYZ123/OS 3.14159, you’re hardly going to want to let it out of your sight… being able to grab a laptop, the reader and a power supply and visit the owner, image the discs and leave would be tremendously useful.

– Reliable, easy to repair — no weird parts that can’t be substituted out with something else!

– USB interface — fast (ish) and standard.

– Open-source design and software

– Cross-platform — Linux, Windows, Mac OS X.

– High accuracy — at least as good as a Trace duplicator, if not better.

– Read any standard disc, and most copy-protected discs, including protection detection/analysis — like Copy II PC, but far more capable.

Fun fun stuff indeed.

>I suppose the more likely event is the transition between two bit zones becoming more indistinct

Sure. And the magnetic avalanche effect doesn’t help once you get some atoms that have switched/lost polarization.

>I’ve been trying to find some good references on what the Amiga floppy controller can actually do (and how to program it), but haven’t found much beyond “it raw-reads, then the CPU decodes the data”.

There really isn’t an FDC per se. There is no dedicated hardware like the 765/8272; 90% of it is handled in software (assembly in the OS). The actual hardware that _is_ used isn’t floppy-specific hardware. For instance, the blitter chip, which is normally used for graphics, is used to encode/decode the MFM. There is some generic I/O hardware, and the Amiga has the ability to sync the datastream on a syncword.

It’s not like you program a control register, or query a status register for sector number, pin states, etc.

The flexibility of doing things in software makes it so that the Amiga can read/write many different disk formats, like IBM, Mac, and so on. This is also why you see some goofy copy-protection things on the Amiga. As long as they didn’t break compatibility (they sometimes did) or physically break the drive (by stepping too far, etc.), the software people could do whatever they wanted.

Very neat how you detect changes by looking at the read rate.

I figured you might have some association with SPS/CAPS given the type of work you were doing. I’ve emailed them in the past a few times and have been completely ignored by them. Most of their articles, etc, are too high level — I don’t see many getting down and dirty.

Thanks